Sorry to everyone for how long it took me to get this write-up out. The perils of starting a new job, I guess. Luckily, we had plenty of notes taken for the entire week, so very little was lost to the black hole that is my memory.

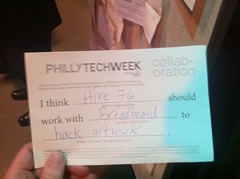

Our arrangements for Philly Tech Week were pretty impromptu, but we managed to pull off a number of fun things.

Monday, 25th: Open Work Night

Open Work Night turned out to be an extension of our spring cleaning from the weekend. We got the space nice and tidy for everyone who would be visiting later in the week. One visitor came by and helped us put together a few shelves, which was incredibly handy, as they required some “lite modification” with a hacksaw before they would fit under our ceilings. I know, I know: our ceilings are freaking tall, so what was up with those shelves?

Tuesday, 26th: Micro-controller Show and Tell

The evening had a pretty light turnout, as people hadn’t quite caught on to what we were doing. However, some of our members (Mike, Chris, and PJ) got a start on a mirror-and-laser text display system. Very cool.

Wednesday, 27th: Regularly Scheduled Open House + Late Night Karaoke

On Wednesday night, we hosted a number of guests for what is normally our Open House night. These usually turn into social gatherings of sorts, and Tech Week was no exception. We found out that one guest is getting ready to launch a new social networking site, another has started a vending machine company focusing on local goods (http://snacklikealocal.com), and another kind soul is looking to donate a Smithy lathe!

PJ got his MIDI Nintendo running pad working. Basically, an Arduino reads the old NES running-pad controller and sends MIDI signals to a host computer, where they can drive any MIDI-capable software; in this case, Ableton Live.
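For the curious, here's a rough sketch of the translation step, written in Python rather than Arduino C and definitely not PJ's actual code: compare the pad's button states between two reads and emit a MIDI Note On for every newly pressed button and a Note Off for every released one. The 12-button layout, the note numbers starting at middle C, and the `pad_to_midi` name are all assumptions for illustration; the 0x90/0x80 status bytes are the standard MIDI Note On/Note Off messages.

```python
# Hypothetical mapping: pad button i triggers MIDI note BASE_NOTE + i.
BASE_NOTE = 60   # middle C; an assumption, not PJ's real mapping
NOTE_ON = 0x90   # MIDI Note On status byte (channel in the low nibble)
NOTE_OFF = 0x80  # MIDI Note Off status byte


def pad_to_midi(prev, curr, channel=0, velocity=100):
    """Compare two snapshots of pad button states and return raw MIDI messages.

    prev, curr: sequences of booleans, one per pad button (True = pressed).
    Returns a list of 3-byte tuples (status, note, velocity).
    """
    if len(prev) != len(curr):
        raise ValueError("button snapshots must be the same length")
    if not 0 <= channel <= 15:
        raise ValueError("MIDI channel must be 0-15")
    messages = []
    for i, (was, now) in enumerate(zip(prev, curr)):
        note = BASE_NOTE + i
        if now and not was:
            # Button newly pressed: start the note.
            messages.append((NOTE_ON | channel, note, velocity))
        elif was and not now:
            # Button released: stop the note.
            messages.append((NOTE_OFF | channel, note, 0))
    return messages


# Hypothetical example: someone steps on pad buttons 0 and 5 at once.
prev = [False] * 12
curr = [False] * 12
curr[0] = True
curr[5] = True
print(pad_to_midi(prev, curr))
# [(144, 60, 100), (144, 65, 100)]
```

Sending only on state changes (edges) rather than on every read keeps the pad from flooding the host with repeated Note On messages while a foot stays planted.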

We did get Late Night Karaoke going, and it was a blast. Adam rocked out with The Darkness’s “I Believe in a Thing Called Love”. Sean sang Gershwin’s “A Foggy Day (In London Town)”. PJ wooed everyone with The Temptations’ “My Girl”. Corrie had us all rolling with laughter with Gayla Peevey’s “I Want A Hippopotamus For Christmas”. Chris fiiiiinally got up to sing the Soul Survivors’ “Expressway To Your Heart”. And Brendan was Brendan with Rick Astley’s “Never Gonna Give You Up”.

Thursday, 28th: DIY/Electronic Music

We had a good mix of newcomers and members for our music night. One person brought in a completely hand-made, seven-string electric guitar he built. The thing was sick; we really wish we had gotten pictures. We jammed out with various synths and drum machines. Sean further extended his Atari Punk Console with a low-pass filter to give it a rounder tone, then blipped and buzzed along with everyone else. Brendan rocked out on the guitar, and Dan was really tearing it up on the keyboard. Definitely a fun night, and we will be looking to do more such nights in the future.

Friday, 29th: “Bricks and Grips” – Arm Wrestling/Puzzle Game Tournament

That night, more guests than members actually showed up. The first two challengers for Arm Wrestling Tetris were Sean McBeth (the creator) and Robert Cheetham, founder and president of Azavea, a GIS software firm in Center City that is doing some genuinely revolutionary work (I know, I used to work in the industry). We also had a bit more electronic music jamming, which was a great time.

Getting the game together was a real team effort: between Brendan’s soundtrack, PJ’s voice-over work, and Sean’s programming and construction, it all fit together perfectly. Next up, Punching Bag Double Dragon!

Saturday, 30th: Artemis Game Session

The developer of Artemis had just released a new version that includes canned missions. We played the first mission with Sean as captain and survived to tell the tale.

While en route to our primary mission objective of observing anomalies in a nearby nebula cluster, we encountered a squadron of Krellians lying in wait to ambush us. Lt. Commander Santoro showed great skill and initiative in destroying the three ships in mere seconds with two well-placed nuclear torpedoes.

After the brief battle, we intercepted a distress call from Deep-Space 49 as it took fire from another Krellian battle group. Already low on energy and weapons, we made a core-burning sprint at maximum warp that nearly drained us dry, and barely scraped by in defending the station. Lt. Peterson performed admirably in her duties managing power levels and is surely responsible for our survival.

DS-49 provided us with much-needed supplies as we returned to our primary mission: scanning nebulae. We returned to the cluster to find another hidden flotilla of Krellians. This time, we were completely out of nukes and could not deal with them as handily as before. We managed to warp out of weapons range before the ship took any serious damage. Our second sortie against the Krellians fared better: we damaged them, but did not finish them off. With weapons running low, Commander Toliaferro performed commendably in maintaining a flanking position on the enemy, allowing Lt. Commander Santoro to dispatch them with beam weapons.

With our forward torpedoes exhausted and energy running low, we were ambushed by a third squad of Krellians while under way to DS-45 for supplies. We managed to warp into a nebula for cover, but the nebula stripped our shields, and we were stuck with the enemy between us and safety. Having nothing but mines left, Captain McBeth hatched a plan. We would fly through the center of the squadron, diverting repair crews and energy to protect critical systems as we bore the brunt of the frontal assault, then drop our mines in the middle of the squad as we passed through and warp away to safety on the other side. The plan required a high level of coordination from every crew member. As Commander Toliaferro deftly navigated at close quarters through the heart of the beast, the first pass dealt great damage to the enemy, but they weren’t quite finished. Rather than coming about for another pass, Captain McBeth ordered all-stop in the middle of the battle. As the enemy closed to weapons range, Lt. Commander Santoro dropped the last few mines, while Lt. Peterson delicately balanced the needs of the repair crews, shields, weapon systems, and engines largely on instinct, with no time to run the proper load-balancing calculations. As a result, the final Krellian squad was completely destroyed, and the S.S. Artemis flew back to DS-45 under her own power, completely undamaged.

Another mission accomplished.